Google Cloud Monitoring with SecOps: What you need to know

Keeping an eye on your Google Cloud Platform (GCP) setup is important to ensure everything’s running smoothly and securely. By using the right Cloud Audit logging methods, you can stay on top of your infrastructure using SecOps while catching and tackling potential issues. This is not a comprehensive guide to GCP logging, but it will get you started and covers the gotchas we discovered along the way. Let’s break down the logging options, how to set them up, and the good and not-so-good things about each.

GCP High-Level Overview

Before we begin, please refer to the GCP logging diagram, which illustrates some of the concepts we will cover. We will touch on Log Sinks, Cloud Storage, Pub/Sub, and how these logs are ingested into Google SecOps. For deeper insights into GCP routing and storage options, please refer to the following link: https://cloud.google.com/logging/docs/routing/overview.

Enable Logging and What Logs Should You Monitor?

To provide the best visibility into your GCP environment, it is recommended to monitor both the GCP infrastructure (Cloud Audit Logs) and any logs from hosts deployed within the infrastructure. However, you should be aware that certain auditing features are not enabled by default, nor do they always align with expectations.

1. Cloud Audit Logs Configuration: These include logs for Admin Activity and Data Access. As you may have learned from our webinar or other sources, compromised credentials and misconfigured storage buckets (GCS) are major sources of compromise. The natural conclusion is to capture and monitor the relevant logs to gain visibility. You might also assume that this logging is enabled by default, but it is not.

By default, Admin Activity audit logs (covering configuration changes, updates, and the creation of GCP resources) are always written and are stored in the _Required log bucket. Data Access audit logs, once enabled, are routed to the _Default log bucket. However, Data Access logging for services like Google Cloud Storage must be explicitly enabled and configured. In fact, the only service with Data Access logs configured by default is BigQuery.

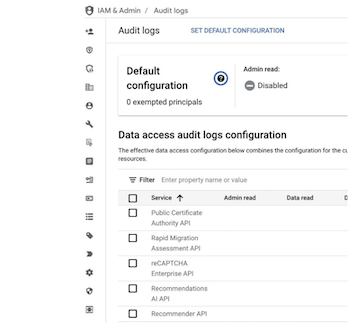

Project Defaults are Disabled

To monitor your GCS buckets, you will need to enable Data Access logs for Google Cloud Storage. We recommend enabling the Admin Read, Data Read, and Data Write log types for complete visibility. Keep in mind that this setting is project-specific and must be enabled for each GCP project you want to monitor. Alternatively, your GCP environment can be configured to use folder/organizational-level policy inheritance for logging (more details here: https://cloud.google.com/iam/docs/resource-hierarchy-access-control).

Once enabled, GCS logging is searchable using Google’s Log Explorer in the GCP Console. You can also enable all Data Access APIs if desired, but be aware that there are costs associated with this.
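If you prefer to script this setting rather than click through the Console, Data Access logging is represented in a project's IAM policy as an auditConfigs section. The sketch below builds that section for Cloud Storage with the three log types recommended above. It assumes you fetch the current policy with gcloud projects get-iam-policy and apply the result with gcloud projects set-iam-policy; the function names and the placeholder policy are illustrative, not part of our deployment.

```python
import json


def storage_audit_config(log_types=("ADMIN_READ", "DATA_READ", "DATA_WRITE")):
    """Build the auditConfigs entry that enables Data Access logs for Cloud Storage."""
    return {
        "service": "storage.googleapis.com",
        "auditLogConfigs": [{"logType": log_type} for log_type in log_types],
    }


def with_audit_config(policy, audit_config):
    """Return a copy of an IAM policy dict with audit_config added or replaced."""
    updated = dict(policy)
    # Keep audit configs for other services untouched.
    others = [
        cfg for cfg in policy.get("auditConfigs", [])
        if cfg.get("service") != audit_config["service"]
    ]
    updated["auditConfigs"] = others + [audit_config]
    return updated


if __name__ == "__main__":
    # Placeholder policy; in practice this comes from get-iam-policy.
    current_policy = {"bindings": [], "etag": "PLACEHOLDER"}
    print(json.dumps(with_audit_config(current_policy, storage_audit_config()), indent=2))
```

Because the setting lives in the IAM policy, the same fragment can be applied at the folder or organization level if you rely on policy inheritance.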

Enable Logging for Google Cloud Storage

Once enabled, Log Explorer is useful for validating whether the logs are being generated. In the 'How to Ingest Logs' section, we will explain how to get those logs into SecOps.
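As a rough aid for that validation step, the sketch below builds a Log Explorer query that narrows results to Data Access audit entries for Cloud Storage. The project ID is a placeholder and the helper name is hypothetical; the query relies on the standard audit log name and the protoPayload.serviceName field.

```python
def data_access_filter(project_id, service="storage.googleapis.com"):
    """Return a Log Explorer query for Data Access audit logs of one service."""
    # Log names URL-encode the slash in the log ID as %2F.
    log_name = f"projects/{project_id}/logs/cloudaudit.googleapis.com%2Fdata_access"
    return f'logName="{log_name}" AND protoPayload.serviceName="{service}"'


if __name__ == "__main__":
    # "my-project" is a placeholder project ID.
    print(data_access_filter("my-project"))
```

Paste the printed query into Log Explorer after reading or writing an object in a monitored bucket; if nothing appears, the Data Access log types are probably not enabled for that project.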

Next, let’s discuss logging for VM instances in your GCP environment.

2. Google Ops Agent: We highly recommend installing the Google Ops Agent on each VM instance in your GCP environment. It’s a game changer for:

Capturing logs such as audit.log, Apache logs, and virtually anything that is written to syslog

Filling in gaps that GCP Audit Logs might miss

Why it’s great: It provides visibility into what's happening on your VMs and adds an extra layer of security.

What to watch out for: It requires more effort to set up.

There are multiple ways to deploy the Google Ops Agent. You can install it manually on each host, or use tools like Terraform (or your preferred Infrastructure as Code solution) to deploy it automatically when provisioning your hosts. We chose to use Terraform as it automates many steps that don’t need to be repeated, saving time.

For a detailed how-to on the Google Ops Agent, refer to this link: HOWTO install

As part of our Terraform template, we included a few lines from the HOWTO guide to install the agent during VM instance provisioning.

    curl -sSO https://dl.google.com/cloudagents/add-google-cloud-ops-agent-repo.sh
    sudo bash add-google-cloud-ops-agent-repo.sh --also-install

The curious thing was that once it was installed, we noticed there was no default Google Ops Agent (GOA) configuration. We had to create the following GOA configuration file based on Google’s documentation and modify it to include the logs we wanted to capture for each host. In this case, we wanted to capture auth.log. This configuration file is placed under /etc/google-cloud-ops-agent/config.yaml on Linux.

    logging:
      receivers:
        syslog:
          type: files
          include_paths:
          - /var/log/messages
          - /var/log/syslog
          - /var/log/auth.log
      service:
        pipelines:
          default_pipeline:
            receivers: [syslog]
    metrics:
      receivers:
        hostmetrics:
          type: hostmetrics
          collection_interval: 60s
      processors:
        metrics_filter:
          type: exclude_metrics
          metrics_pattern: []
      service:
        pipelines:
          default_pipeline:
            receivers: [hostmetrics]
            processors: [metrics_filter]

How to Ingest Logs into SecOps

Now that you have enabled Cloud Audit logging and VM instance/host-based logging in your GCP project, it’s time to get those logs into SecOps. However, this is not as straightforward as you might assume. There are several options to consider, and the one you choose will depend on your specific use case. For our detection project, we considered using GCS and Pub/Sub collection methods. In order to send logs generated by GCP, you will need to create a Sink.

Creating a Sink

The first step is to configure a Log Router that sends your chosen logs to their destination. You can find this in your GCP Console under Logging > Configure Log Router > Create Sink. For our purposes, we focused on GCS and Pub/Sub, as these are highly compatible with Google SecOps.

When you create a Sink, you define its destination. If you have batch logging from some devices or use cases that are not mission-critical, GCS is a suitable option. However, if you need time-sensitive detections and responses, Google Pub/Sub is the better choice.

Google SecOps Configuration

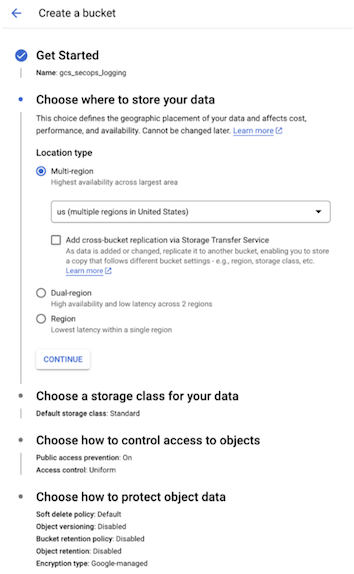

Google Cloud Storage (GCS)

If you plan to use GCS, you must first create a destination bucket for the Sink to send its logs. Please note that Google SecOps relies on a one-to-one mapping of a single bucket to a single log type, which SecOps uses to monitor, ingest, and parse. In other words, you can only have one type of log per bucket. In our environment, we created a unique bucket specifically for GCS audit logs, one for firewall logs, and another for Google Ops Agent logs. For our example, we created the following bucket references:

gs://gcs_secops_logging

gs://fw_secops_logging

gs://host_secops_logging

GCS Logging Bucket example

Since you have to have separate buckets for each log_type, you must create a unique Sink for each to route the logs to the appropriate corresponding GCS bucket.
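The one-sink-per-bucket layout can be sketched as a small plan that pairs each log type with its destination and an inclusion filter. The bucket names come from the example above; the sink names and the filters are assumptions for illustration (the host filter assumes the Ops Agent receiver is named syslog, as in the configuration shown earlier), so adjust them to the logs you actually route.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SinkPlan:
    name: str         # hypothetical sink name
    destination: str  # value for the sink destination field
    log_filter: str   # assumed inclusion filter for this log type


def gcs_destination(bucket):
    """Log Router destination string for a Cloud Storage bucket."""
    return f"storage.googleapis.com/{bucket}"


def build_plans():
    """One sink per log type, each routed to its own bucket."""
    return [
        SinkPlan(
            "gcs-audit-sink",
            gcs_destination("gcs_secops_logging"),
            'log_id("cloudaudit.googleapis.com/data_access") '
            'AND protoPayload.serviceName="storage.googleapis.com"',
        ),
        SinkPlan(
            "firewall-sink",
            gcs_destination("fw_secops_logging"),
            'log_id("compute.googleapis.com/firewall")',
        ),
        SinkPlan(
            "host-sink",
            gcs_destination("host_secops_logging"),
            'log_id("syslog")',
        ),
    ]


if __name__ == "__main__":
    for plan in build_plans():
        print(f"{plan.name}: {plan.destination} <- {plan.log_filter}")
```

Each entry maps to the sink name, destination, and inclusion filter fields of the Create Sink form. Keeping the plan in one place makes it easy to confirm that no two log types share a bucket.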

Now that you have enabled logging and configured a place to store your logs, it’s time to ingest them into Google SecOps!

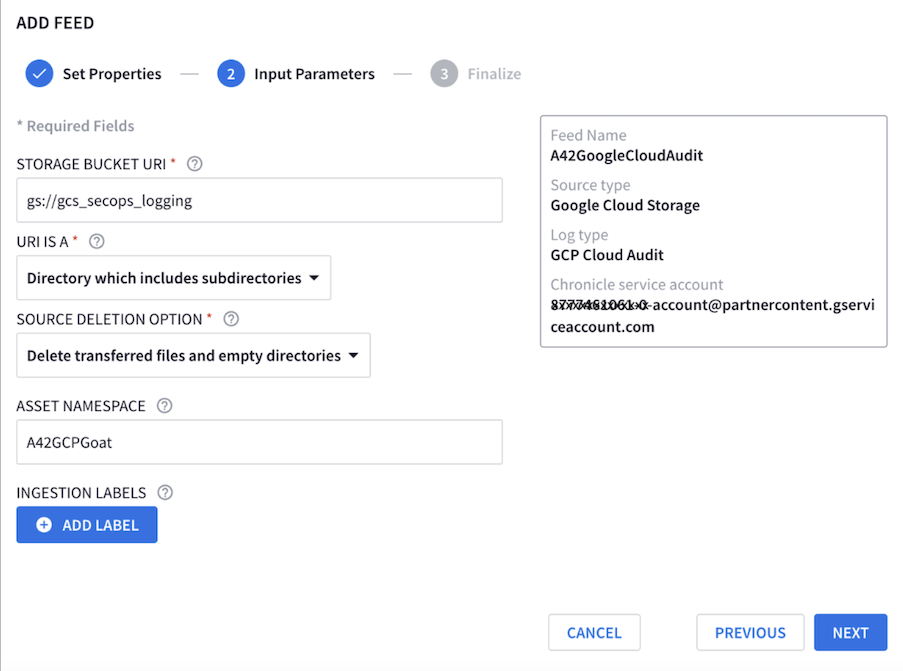

In Google SecOps, navigate to Settings > Feeds. Create a feed with the source type set to Google Cloud Storage. You will then need to configure the parameters of your GCS bucket.

An important part of this step is to obtain your Chronicle Service Account name. This Service Account will need permissions to manage the logs in your GCP bucket. In our example, we used Terraform to create a new GCP role that maps to the Chronicle Service Account, allowing it to manage the logs placed into the logging bucket. Below are the permissions we used for our lab. Note that you can also create the role manually in GCP and assign the same permissions.

    # Custom role for the Chronicle Service Account.
    # The enclosing resource block and its local name ("secops_bucket_mgr")
    # are assumed here for completeness; the arguments are the ones we used.
    resource "google_project_iam_custom_role" "secops_bucket_mgr" {
      role_id     = "secopsbucketmgr"
      project     = google_project.my_project.project_id
      title       = "SecOps GCS Reader"
      description = "Used to read/manage GCS-based logs in buckets"
      permissions = [
        "storage.folders.create",
        "storage.folders.delete",
        "storage.folders.get",
        "storage.folders.list",
        "storage.folders.rename",
        "storage.managedFolders.get",
        "storage.managedFolders.list",
        "storage.multipartUploads.abort",
        "storage.multipartUploads.create",
        "storage.multipartUploads.list",
        "storage.multipartUploads.listParts",
        "storage.objects.create",
        "storage.objects.delete",
        "storage.objects.get",
        "storage.objects.list",
        "storage.objects.update",
      ]
    }

The final step is to configure the feed to pull the logs from the storage bucket and determine how Google SecOps will manage them.

Google SecOps Feed Management Configuration Options

Once this is complete, you should start seeing your logs in Google SecOps.

Google Pub/Sub

As mentioned earlier, logs that need to be monitored in near real time must be collected using a different method. Google Pub/Sub is the best mechanism to meet this requirement. Similar to GCS, you will need to create a unique Pub/Sub destination for each log type.

First, navigate to your GCP Console and create a Topic. In our example, we created topics to represent our required log types: Cloud Audit Logs, Firewall Logs, and Host-based Logs (see below).

Example of topics for Pub/Sub collection

Next, create a Sink as you did with GCS, but this time, select Cloud Pub/Sub as the destination and choose the corresponding log topic. (See above.)

Finally, in Google SecOps Feeds, create a feed based on the designated topics.

Google SecOps Pub/Sub Feed configuration example

Once the feed is created, copy the Endpoint Information, as you will need it to create a subscription.

In the GCP Console, create a subscription, assign it a unique ID, select the matching topic you created earlier, choose Push as the delivery type, and paste the Endpoint Information from Google SecOps.

Google Cloud Subscription Example

Now, you should have successfully configured Google SecOps to collect GCP logs via Google Pub/Sub!
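To make the push flow less opaque, it helps to know what the subscription actually delivers: each push is an HTTP POST whose JSON body wraps the message, and for a log sink the base64-encoded data field holds the log entry as JSON. The sketch below decodes such an envelope. Google SecOps does this on its side; the code is only meant for understanding or debugging with a test endpoint, and the sample values are made up.

```python
import base64
import json


def decode_push_envelope(body):
    """Unwrap a Pub/Sub push request body and return the routed log entry."""
    envelope = json.loads(body)
    message = envelope["message"]
    # The log entry is base64-encoded inside message.data.
    payload = base64.b64decode(message["data"]).decode("utf-8")
    return {
        "message_id": message.get("messageId"),
        "subscription": envelope.get("subscription"),
        "log_entry": json.loads(payload),
    }


if __name__ == "__main__":
    # Made-up log entry and identifiers, for illustration only.
    entry = {"logName": "projects/my-project/logs/syslog", "textPayload": "example line"}
    body = json.dumps({
        "message": {
            "data": base64.b64encode(json.dumps(entry).encode("utf-8")).decode("ascii"),
            "messageId": "1",
        },
        "subscription": "projects/my-project/subscriptions/host-logs-sub",
    })
    print(decode_push_envelope(body)["log_entry"]["logName"])
```

If a feed shows no data, pointing a temporary push subscription at an endpoint you control and decoding a few messages this way can confirm whether the sink is publishing what you expect.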

By setting up the logging methods in this blog, you’ll have more visibility and insights in Google SecOps to keep your GCP environment secure and running like a well-oiled machine. Happy monitoring!

The following is a summary of both log collection methods in SecOps.

Summary of Logging Methods

Other GCP Audit Considerations

IAM Audit Logs

Capturing Identity and Access Management (IAM) Logs is crucial for visibility into identity and access activities. However, these logs are not real-time—we observed delays ranging from a few hours to a full day. This is not due to Google SecOps or Log Sink mechanisms but rather how GCP inherently logs IAM events. This delay makes IAM logs less suitable for real-time monitoring.

Public Objects Monitoring

Another key challenge is monitoring public objects, such as GCS buckets that allow anonymous access. Access to these objects is not logged by default. To enable logging for them, you must complete the following steps:

Enable Native Bucket logging to GCS

Create a destination GCS bucket (iam_gcp_logs)

Enable logging on the bucket you wish to monitor

Commands:

Enable logging: gcloud storage buckets update gs://my_public_bucket --log-bucket=gs://iam_gcp_logs

Remove logging: gcloud storage buckets update gs://my_public_bucket --clear-log-bucket

Why Enable Public Object Logging?

Enabling logging for public buckets provides visibility into public bucket activity, helping you monitor access and potential security risks.

Challenges to Consider:

Log delays – Logs can take 1 hour to 1 day to appear.

Log format inconsistencies – Logs are generated in CSV format instead of JSON, requiring a custom parser for proper analysis.

Limited administrative insights – These logs do not provide any administrative management data.

Encryption blind spots – If you use object-level encryption, GCP does not log or alert on these activities. This is a critical gap, especially in light of recent ransomware attacks targeting AWS S3, where similar blind spots were exploited.
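On the CSV format challenge noted above, a custom parser does not need to be elaborate. The sketch below converts usage log rows into JSON-friendly dictionaries. It assumes each file starts with a header row of field names such as time_micros, c_ip, cs_method, cs_uri, sc_status, cs_bucket, and cs_object; the sample row is made up and shortened to a few columns for readability.

```python
import csv
import io
import json
from datetime import datetime, timezone


def parse_usage_log(csv_text):
    """Convert GCS usage log CSV text into a list of JSON-friendly dicts."""
    events = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        event = dict(row)
        # time_micros is microseconds since the Unix epoch.
        seconds = int(row["time_micros"]) / 1_000_000
        event["timestamp"] = datetime.fromtimestamp(seconds, tz=timezone.utc).isoformat()
        event["sc_status"] = int(row["sc_status"])
        events.append(event)
    return events


if __name__ == "__main__":
    # Made-up sample with a subset of columns, for illustration only.
    sample = (
        '"time_micros","c_ip","cs_method","cs_uri","sc_status","cs_bucket","cs_object"\n'
        '"1700000000000000","203.0.113.7","GET","/my_public_bucket/report.pdf","200",'
        '"my_public_bucket","report.pdf"\n'
    )
    print(json.dumps(parse_usage_log(sample), indent=2))
```

Because the reader keys on the header row, extra columns in real files pass through unchanged, which keeps the sketch usable as a starting point for a fuller parser.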